ACKNOWLEDGEMENTS

The

time I had at USC during the Ph.D. route was a very enriching period of my

life. I had the opportunity to work in an exciting and stimulating research

environment, led by Michael Arbib.

I

would like to present my deepest gratitude to The Scientific and Technical

Research Council of Turkey (TUBITAK) for providing me the scholarship that made

it possible for me to attempt and complete the Ph.D. study presented in this

thesis. The study would not have been possible if TUBITAK did not provide

support for the very first semester and the final semester at USC.

I

would like to thank Michael Arbib for guiding and educating me throughout the

my years at USC. He has been an extraordinary advisor and mentor, whom I owe

all the brain theory I learned. I would also like to present my

gratitude to Michael Arbib for providing support via HFSP and USCBP grants.

Without HFSP and USCBP support, this study would not be possible.

Stefan

Schaal is a great mentor, who has been a constant support and source of

inspiration with never-ending energy. Besides introducing me to Robotics, he

and his colleague, Sethu Vijayakumar were very influential in maturating the

concept of machine learning in my mind.

I

also owe a great debt of gratitude to Nina Bradley for being a source positive

energy and mentoring me especially in infant motor development. Without

her, the thesis would be lacking a major component.

I

am also full of gratitude to my Ph.D. qualification exam comittee members Maja

Mataric and Christoph von der Malsburg for their guidance and support.

I

am greatly indebted to Giacomo Rizzolatti

for enabling me to visit his lab and providing the opportunity to

communicate with not only himself but

also with Vittorio Gallese and

Leonardo Fogassi who have provided

invaluable

insights about mirror neurons. In addition, I am very thankful to

Massimo Matelli

and Giuseppe Luppino,

for the first hand information on the

mirror neuron system connectivity, and to Luciano Fadiga for stimulating

discussions. I would also like to thank to Christian Keysers and Evelyne

(Kohler) for not only actively involving me in their recording sessions but

also offering their sincere friendship.

I

am very thankful to Hideo Sakata for giving me the opportunity to visit his lab

in Tokyo and interact with many researchers including Murata-san with whom I

had very stimulating discussions.

I

am deeply thankful to Mitsuo Kawato, for giving me the opportunity to interact

with various researchers in Japan by having me at ATR during the summer of

2001. My research experience at ATR was very rewarding; I greatly expanded my

knowledge on motor control and motor learning. I would like to salute the staff

at ATR for all their help. I also would like to present my thanks to the

friends at ATR for welcoming me and making me feel at home.

I

would like to present my appreciation and thanks to my mentors at Middle East

Technical University in Turkey. I present my sincere thanks to my masters

advisor Marifi Guler for introducing me to neural computation and to Volkan

Atalay for introducing me to computer vision, and supporting my Ph.D. application.

Especially, I would like to present my gratitude to Fatos Yarman Vural for her

guidance and support during my Masters study and for preparing me for the Ph.D.

work presented in this dissertation. Other influential Computer Science

professors to whom I am grateful for educating me are Nese Yalabik, Gokturk

Ucoluk and Sinan Neftci.

I

would like to present my gratitude to Tosun Terzioglu, Albert Ekip, Turgut

Onder and Semih Koray who were professors of the Mathematics Department at the

Middle East Technical University. They taught me how to think ‘right’ and

exposed the beauty of mathematics to me.

Throughout

these six years at USC, I had the pleasure to meet several valuable people who

contributed to this dissertation. Firstly, I am very thankful to Aude Billard

and Auke Jan Ijspeert for all their support and scientific interaction and

feedback. They have a huge role in helping me get through the tough times

during my Ph.D. work. In addition, I would like to thank Aude Billard for

providing me the computing hardware and helping me have a nice working

environment, which was very essential for the progress of my Ph.D. study. I am

thankful to my great friend Sethu Vijayakumar for his support and stimulating

discussions. Jun Nakanishi, Jun Mei Zu, Aaron D’Souza, Jan Peters and Kazunori

Okada besides being of great support, were always there to discuss issues and

helped me overcome various obstacles. I am deeply thankful to Shrija Kumari for

offering not only her smile and friendship but also her energy to proofread my

manuscript. I owe a lot to Jun Mei Zu: she has always been there as a great

friend and has always offered her help and support when I needed it most. I am

indebted to my ex-officemate and a very valuable friend Mihail Bota for his

constant support and interactions for improving the thesis and providing me the

psychological support to overcome many obstacles throughout my Ph.D. years.

Finally, I would like to thank Juan Salvador Yahya Marmol for being a good

friend and sharing my workload during various periods of my Ph.D. I count

myself very lucky to have these great friends and colleagues whom once again I

present my gratitude: Thank you guys!

Not

a Hedco Neuroscience inhabitant but a very valuable friend, Lay Khim Ong was

always there for offering her help both psychologically and physically (Hey

Khim: thank you for your great editing!). I would like to thank other great

friends who supported me (in spite of my negligence in keeping in touch with

them). Kyle Reynolds, Awe Vinijkul, Aye

Vinijkul Reynolds, Alper Sen, Ebru Dincel: please accept my sincere thanks and

appreciation.

I

owe deep gratitude to my wife Mika Satomi for her patience in dealing with me

in difficult times. She was always

there. Her contribution to this thesis is indispensable. I especially celebrate

and thank her for the artistic talents and hard work that she generously

offered me throughout the Ph.D. study.

I

am greatly indebted to Paulina Tagle and Laura Lopez for their support and help

over all these years. I also would like to thank Laura Lopez and Yolanda Solis

for their kind friendship and support. My gratitude to Luigi Manna, who helped

me with the hardware and software issues during the Ph.D. study.

I

would like to thank Laurent Itti for his generous help for improving our lab

environment and providing partial support for my research. Also, I would like

to salute his lab members for their support and friendship. Florence Miau, in

particular, had always offered her warm friendship during her internship at

USC.

I

would like to present my appreciation to the good things in life particularly,

I would like to thank the ocean for comforting and rejuvenating me during

difficult times.

Finally,

I am deeply indebted to my family, to whom I owe much more than what can be

expressed. This work would not be possible without the help of my parents.

(Anne ve Baba, Evrim ve Nurdan: Hersey icin cok cok tesekkurler!)

LIST OF FIGURES

Figure 2.1 Lateral view of macaque brain

showing the areas of agranular frontal cortex and posterior parietal cortex

(adapted from Geyer et al. 2000). The naming conventions: frontal regions,

Matelli et al.(1991); parietal regions, Pandya and Seltzer (1982) 5

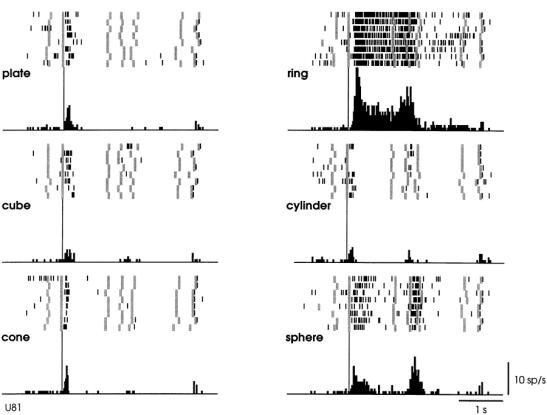

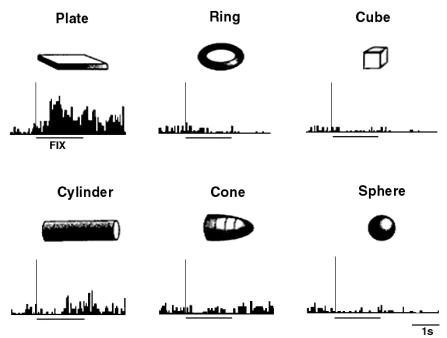

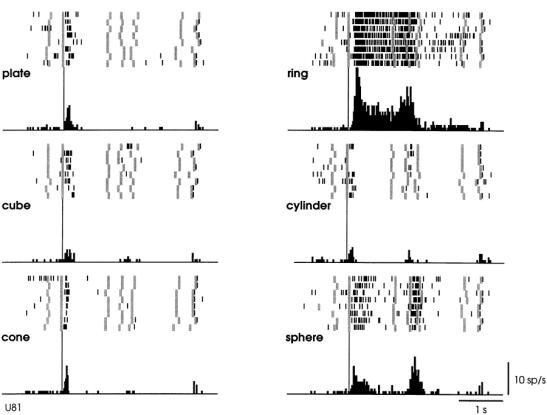

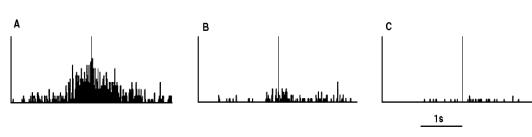

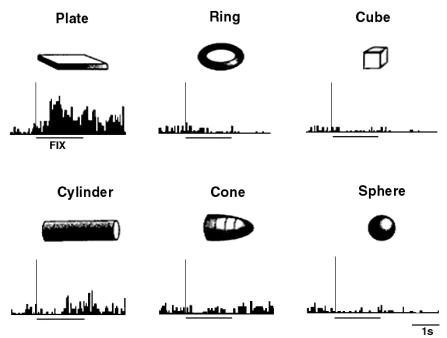

Figure

2.2 A canonical neuron response during grasping of various objects in the dark

(left to right and top to bottom: plate, ring, cube, cylinder, cone and sphere.

The rasters and histograms are aligned with object presentation. Small grey

bars in each raster marks onset of key press, go signal, key release, onset of

object pulling, release signal, and object release, respectively. The peaks in

ring and sphere object cases correspond to the grasping of the object by the

monkey (adapted from Murata et al. 1997a) 6

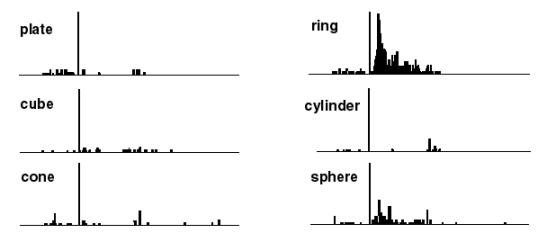

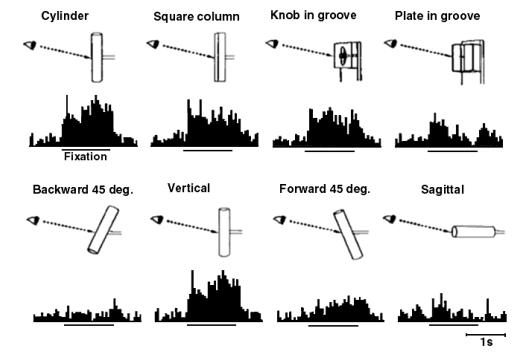

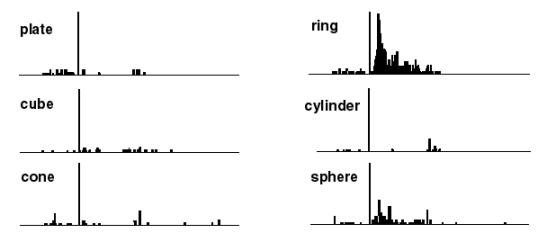

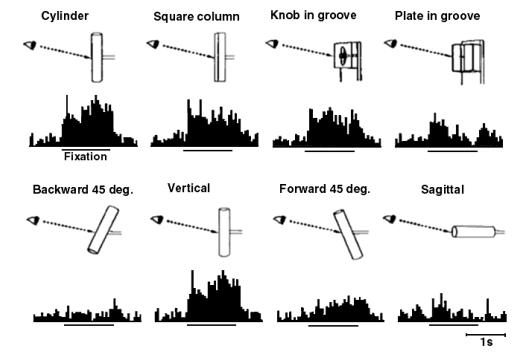

Figure

2.3 The motor responses of the same neuron shown in Figure 2.2. The motor

preference of the neuron is also carried over to the visual preference (compare

the ring and sphere histograms of both figures) (adapted from Murata et al.

1997a) 7

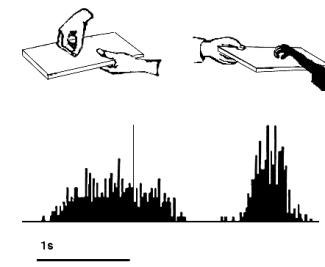

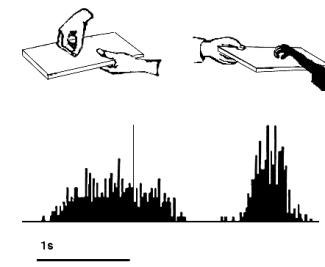

Figure

2.4 Activity of a cell during action observation (left) and action execution

(right). There is no activity in presentation of the object during both initial

presentation and bringing the tray towards the monkey. The vertical line over

the histogram indicates the hand-object contact onset. (from Gallese et al.,

1996). 8

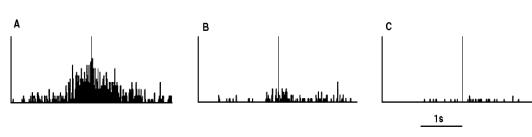

Figure

2.5 Visual response of a mirror neuron. A. Precision grasp B. power grasp C.

mimicking of precision grasp. The vertical lines over the histograms indicate

the hand-object contact onset. (adapted from Gallese et al., 1996) 9

Figure 2.6 Example

of a strictly congruent manipulating mirror neuron: A) The experimenter

retrieved the food from a well in a tray. B) Same action, but performed by the

monkey. C) The monkey grasped a small piece of food using a precision grip. The

vertical lines over the histograms indicate the hand-object contact onset

(adapted from Gallese et al., 1996). 9

Figure 2.7 The

classification of area F5 neurons derived from published literature

(Dipellegrino et al. 1992; Gallese 2002; Gallese et al. 1996; Murata et al.

1997a; Murata et al. 1997b; Rizzolatti et al. 1996a; Rizzolatti and Gallese

2001). All F5 neurons fire in response to some motor action. In addition,

canonical neurons fire for object presentation while the mirror neurons fire

for action observation. The majority of hand related F5 neurons are purely

motor (Gallese 2002)(labelled as Motor Neurons in the figure) 10

Figure

2.8 The macaque parieto-frontal projections from mesial parietal cortex, medial

bank of the intraparietal sulcus and the surface of the superior parietal

lobule (adapted from Rizzolatti et al. 1998). Note that the Brodmann’s area 7m

corresponds to Pandya and Seltzer's (1982) area PGm... 11

Figure

2.9 The intraparietal sulcus opened to show the anatomical location of AIP in

the macaque (adapted from Geyer et al. 2000) 13

Figure

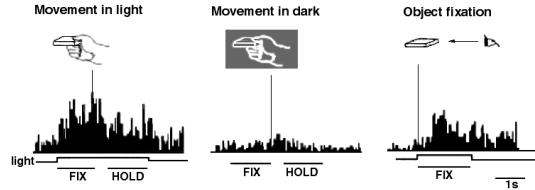

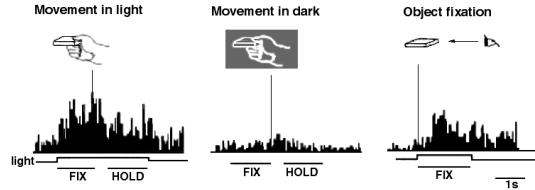

2.10 An AIP visual-dominant neuron activity under three task conditions: Object

manipulation in the light, object manipulation in the dark and object fixation

in the light. The neuron is active during fixation and holding phase when the

action is performed in light condition. However, during grasping in dark the

neuron shows no activity. The fixation of the object alone without grasping

also produces a discharge (adapted from Sakata et al. 1997a) 14

Figure

2.11 Activity the same neuron in Figure

2.10 during fixation of different objects. The neuron show selectivity

for horizontal plate (adapted from

Sakata et al. 1997a) 14

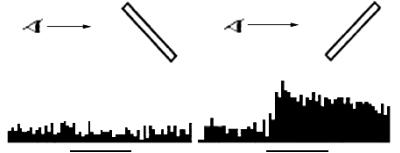

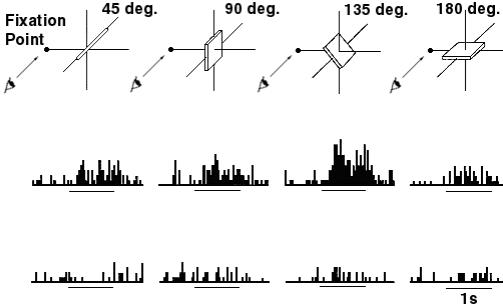

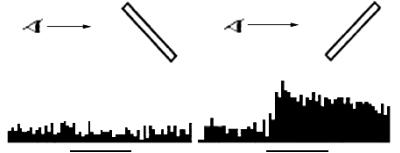

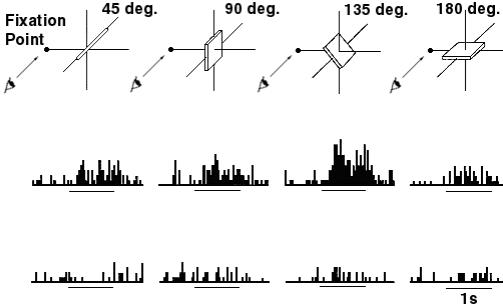

Figure

2.12 An AIP visual-dominant neuron’s axis orientation tuning and object

fixation response is shown. The neuron fires maximally during the fixation of a

vertical bar or a cylinder. The tuning is demonstrated in the lower half of the

figure (adapted from Sakata et al. 1999) 15

Figure

2.13 Response of an axis-orientation-selective (AOS) neuron in the caudal part

of the lateral bank of the intraparietal sulcus (c-IPS) to a luminous bar

tilted 45° forward (left) or 45 backward (right) in the sagittal plane. The

monkey views the bar with

binocular vision. The line segment under the histograms mark the fixation

start and the period of 1 second.

(adapted from Sakata et al. 1999) 16

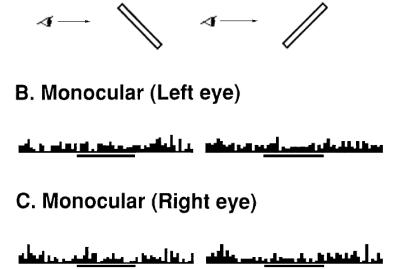

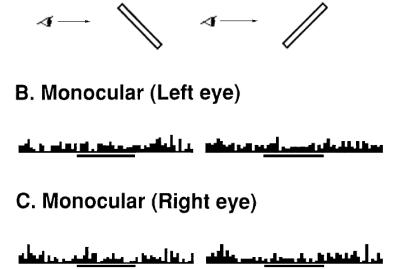

Figure

2.14 The response of the same neuron in Figure 2.13, for monocular vision

conditions for the left and right eyes. (adapted from Sakata et al. 1999) 16

Figure 2.15 Orientation

tuning of a surface-orientation selective (SOS) neuron. First row: Stimuli

presented. Middle row: responses of the cell with binocular view. Last row:

responses of the cell with monocular view (adapted from Sakata et al. 1997a) 17

Figure

2.16 The reconstructed connectivity of area 7a. The thickness of the arrows

represent the strength of the connection. (adapted from Bota 2001) 20

Figure

2.17 The reconstructed connectivity of area 7b. The thickness of the arrows

represent the strength of the connection. (adapted from Bota 2001) 21

Figure

2.18 The reconstructed connectivity of area AIP. The thickness of the arrows

represent the strength of the connection. (adapted from Bota 2001) 22

Figure

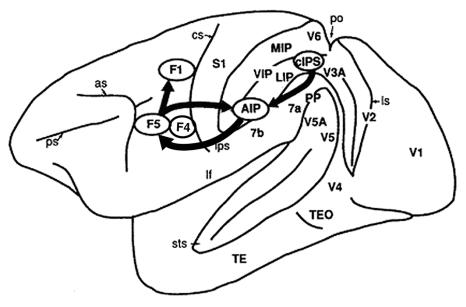

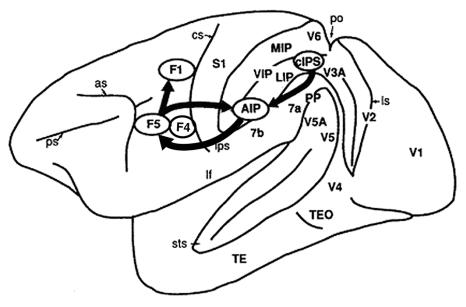

3.1 Lateral view of the monkey cerebral cortex (IPS, STS and lunate sulcus

opened). The visuomotor stream for hand action is indicated by arrows (adapted

from Sakata et al., 1997) 27

Figure

3.2 AIP extracts the affordances and

F5 selects the appropriate grasp from the AIP ‘menu’. Various biases are sent

to F5 by Prefrontal Cortex (PFC) which relies on the recognition of the object

by Inferotemporal Cortex (IT). The dorsal stream through AIP to F5 is

replicated in the MNS model 28

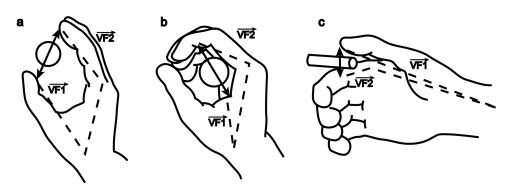

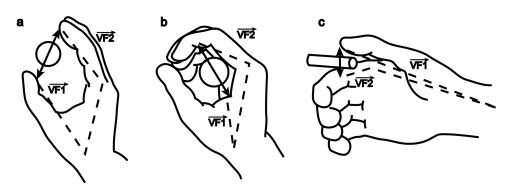

Figure

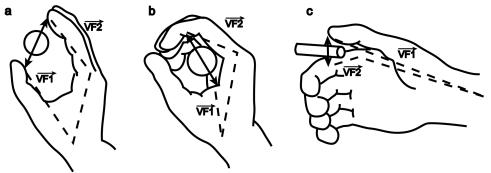

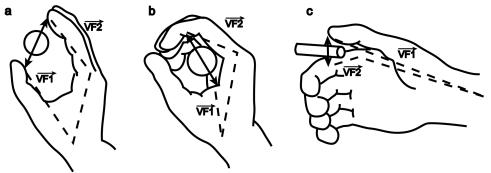

3.3 Each of the 3 grasp types here is defined by specifying two "virtual

fingers", VF1 and VF2, which are groups of fingers or a part of the hand

such as the palm which are brought to bear on either side of an object to grasp

it. The specification of the virtual fingers includes specification of the

region on each virtual finger to be brought in contact with the object. A

successful grasp involves the alignment of two "opposition axes": the

opposition axis in the hand joining

the virtual finger regions to be opposed to each other, and the opposition axis in the object joining

the regions where the virtual fingers contact the object. (Iberall and Arbib

1990) 29

Figure

3.4 The components of hand state F(t) = (d(t),

v(t), a(t), o1(t), o2(t), o3(t), o4(t)).

Note that some of the components are purely hand configuration parameters

(namely v,o3,o4,a) whereas others are

parameters relating hand to the object. 31

Figure

3.5 The MNS (Mirror Neuron System)

model. (i) Top diagonal: a portion of the FARS model. Object features are

processed by cIPS and AIP to extract grasp affordances, these are sent on to

the canonical neurons of F5 that choose a particular grasp. (ii) Bottom right.

Recognizing the location of the object provides parameters to the motor

programming area F4 which computes the reach. The information about the reach

and the grasp is taken by the motor cortex M1 to control the hand and the arm.

(iii) New elements of the MNS model: Bottom left are two schemas, one to

recognize the shape of the hand, and the other to recognize how that hand is

moving. (iv) Just to the right of these is the schema for hand-object spatial

relation analysis. It takes information about object features, the motion of

the hand and the location of the object to infer the relation between hand and

object. (v) The center two regions marked by the gray rectangle form the core

mirror circuit. This complex associates the visually derived input (hand state)

with the motor program input from region F5canonical neurons during the

learning process for the mirror neurons. The grand schemas introduced in

section 3.2 are illustrated as the following. The “Core Mirror Circuit” schema

is marked by the center grey box; The “Visual Analysis of Hand State” schema is

outlined by solid lines just below it, and the “Reach and Grasp” schema is

outlined by dashed lines. (Solid arrows: established connections; dashed

arrows: postulated connections) 32

Figure

3.6 (a) For purposes of simulation, we

aggregate the schemas of the MNS (Mirror Neuron System) model of Figure

3.5 into three "grand schemas" for Visual Analysis of Hand State,

Reach and Grasp, Core Mirror Circuit. (b) For detailed analysis of the Core

Mirror Circuit, we dispense with simulation of the other two grand schemas and

use other computational means to provide the three key inputs to this grand

schema. 34

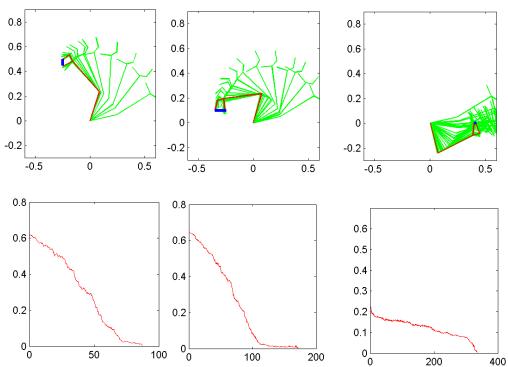

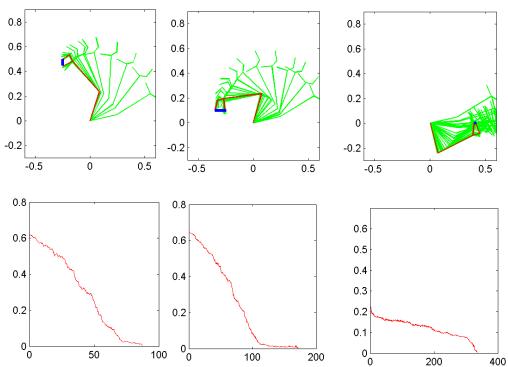

Figure

3.7 (Left) The final state of arm and hand achieved by the reach/grasp

simulator in executing a power grasp on the object shown. (Right) The hand

state trajectory read off from the simulated arm and hand during the movement

whose end-state is shown at left. The hand state components are: d(t), distance

to target at time t; v(t), tangential velocity of the wrist; a(t), Index and

thumb finger tip aperture; o1(t), cosine of the angle between the object axis

and the (index finger tip – thumb tip) vector; o2(t), cosine of the angle

between the object axis and the (index finger knuckle – thumb tip) vector;

o3(t), The angle between the thumb and the palm plane; o4(t), The angle between

the thumb and the index finger. 37

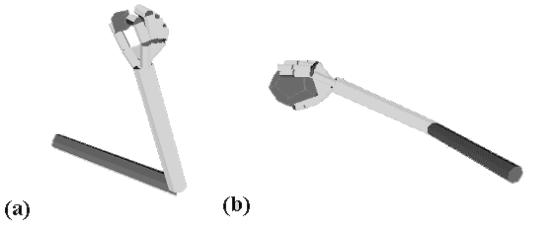

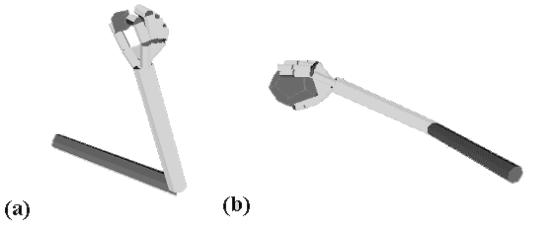

Figure

3.8 Grasps generated by the simulator. (a) A precision grasp. (b) A power

grasp. (c) A side grasp.. 38

Figure

3.9 (a) Training the color expert,

based on colored images of a hand whose joints are covered with distinctively

colored patches. The trained network will be used in the subsequent phase for

segmenting image. (b) A hand image (not from the training sample) is fed to the

augmented segmentation program. The color decision during segmentation is done

by consulting to the Color Expert. Note that a smoothing step (not shown) is

performed before segmentation.. 40

Figure

3.10 Illustration of the model matching system. Left: markers located by

feature extraction schema. Middle and Right: initial and final stages of model

matching. After matching is performed a number of parameters for the Hand

configuration are extracted from the matched 3D model 41

Figure

3.11 The scaling of an incomplete input to form the full spatial representation

of the hand state As an example, only one component of the hand state, the

aperture is shown. When the 66 percent of the action is completed, the

pre-processing we apply effectively causes the network to receive the stretched

hand state (the dotted curve) as input as a re-representation of the hand state

information accessible to that time (represented by the solid curve; the dashed

curve shows the remaining, unobserved part of the hand state) 44

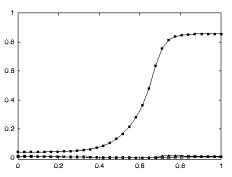

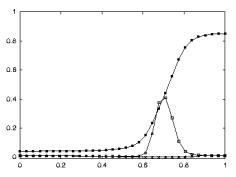

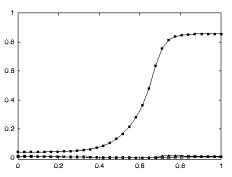

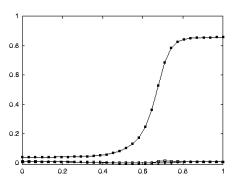

Figure

3.12 The solid curve shows the effective input that the network receives as the

action progresses. At each simulation cycle the scaled curves are sampled (30

samples each) to form the spatial input for the network. Towards the end of the

action the networks input gets closer to the final hand state. 45

Figure

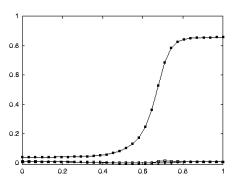

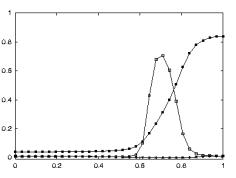

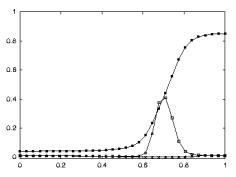

3.13 (a) A single grasp trajectory viewed from three different angles

to clearly show its 3D pattern. The wrist trajectory during the grasp is marked

by square traces, with the distance between any two consecutive trace marks

traveled in equal time intervals. (b)

Left: The input to the network. Each component of the hand state is

labelled. (b) Right: How the

network classifies the action as a power grasp: squares: power grasp output;

triangles: precision grasp; circles: side grasp output. Note that the response

for precision and side grasp is almost zero. 47

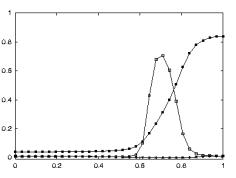

Figure

3.14 Power and precision grasp resolution. The conventions used are as in the

previous figure. (a) The curves for power and precision cross towards the end

of the action showing the change of decision of the network. (b) The left shows

the initial configuration and the right shows the final configuration of the

hand 48

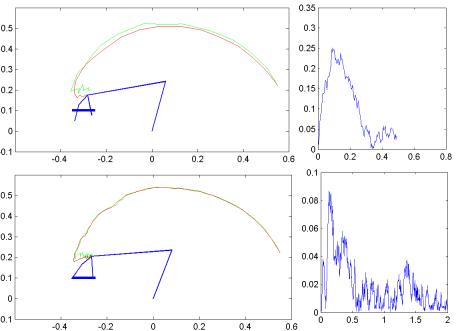

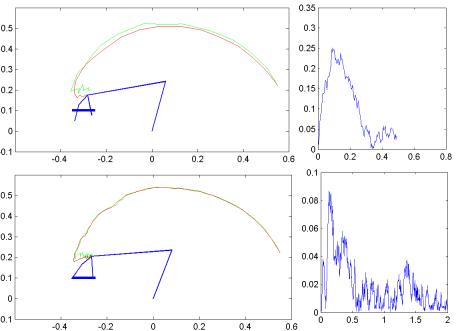

Figure 3.15: (Top) Strong precision grip mirror response for a reaching

movement with a precision pinch. (Bottom) Spatial location perturbation

experiment. The mirror response is greatly reduced when the grasp is not

directed at a target object. (Only the precision grasp related activity is

plotted. The other two outputs are negligible.) 48

Figure

3.16 Altered kinematics experiment. Left: The simulator executes the grasp with

bell-shaped velocity profile. Right: The simulator executes the same grasp with

constant velocity. Top row shows the graphical representation of the grasps and

the bottom row shows the corresponding output of the network. (Only the

precision grasp related activity is plotted. The other two outputs are

negligible.) 49

Figure

3.17 Grasp and object axes mismatch experiment. Rightmost: the change of the

object from cylinder to a plate (an object axis change of 90 degrees).

Leftmost: the output of the network before the change (the network turns on the

precision grip mirror neuron). Middle: the output of the network after the object

change. (Only the precision grasp related activity is plotted. The other two

outputs are negligible.) 50

Figure

3.18 The plots show the level of mirror responses of the explicit affordance coding

object for an observed precision pinch for four cases (tiny, small, medium, big

objects). The filled circles indicate the precision activity while the empty

squares indicate the power grasp related activity.. 52

Figure

3.19 The solid curve: the precision grasp output, for the non-explicit

affordance case, directed to a tiny object. The dashed curve: the precision

grasp output of the model to the explicit affordance case, for the same object. 52

Figure

3.20: Empty squares indicate the

precision grasp related cell activity, while the filled squares represent the

power grasp related cell activity. The grasps show the effect of changing the

object affordance, while keeping a constant hand state trajectory. In each

case, the hand-state trajectory provided to the network is appropriate to the

medium-sized object, but the affordance input to the network encodes the size

shown. In the case of the biggest object affordance, the effect is enough to

overwhelm the hand state’s precision bias. 53

Figure

3.21 The graph is drawn to show the

decision switch time versus object size. The minimum is not at the boundary,

that is, the network will detect a precision pinch quickest with a medium

object size. Note that the graph does not include a point for "Biggest

object" since there is no resolution point in this case (see the final

panel of Figure 3.19) 54

Figure

3.22 The precision grasp action used to test our visual system is depicted by

superimposed frames (not all the frames are shown) 54

Figure

3.23 The video sequence used to test the visual system is shown together with

the 3D hand matching result (over each frame). Again not all the frames are

shown.. 55

Figure

3.24 The plot shows the output of the MNS model when driven by the visual

recognition system while observing the action depicted in Figure 3.22. It must

be emphasized that the training was performed using the synthetic data from the

grasp simulator while testing is performed using the hand state extracted by

the visual system only. Dashed line: Side grasp related activity; Solid line:

Precision grasp related activity. Power grasp activity is not visible as it

coincides with the time axis. 56

Figure

4.1 The elevated circular region corresponds to the area defined by the

equation (x*x+y*y) <0.25. The environment returns +1 as the reward if the

action falls into the circular region, otherwise –1 is returned. 64

Figure

4.2 The adaptation of the firing potential of the stochastic units are shown as

a series of evolving 3D maps. (left to right and top to bottom) 65

Figure

4.3. The normalized histogram of the actions generated over 60000 samples. Note

that the actions generated captured the environment’s reward distribution (see

Figure 4.1). 66

Figure

4.4 The stochastic environment’s double peaked reward distribution (see text

for the explanation) 66

Figure

4.5 Some snapshots showing the phases of learning of the layer in the

stochastic environment where the reward distribution has two peaks (see Figure

4.4). 67

Figure

4.6. The normalized histogram of 60000 data points (actions) generated by the

trained layer in the stochastic environment depicted in Figure 4.4. 68

Figure

5.1 Infant grip configurations can be divided in two categories: power and

precision grips. Infants tend to switch from power grips to precision grips as

they grow (adapted from Butterworth et al. 1997) 73

Figure

5.2 The structure of the Infant Learning to Grasp Model. The individual layers

are trained based on somatosensory feedback. 76

Figure

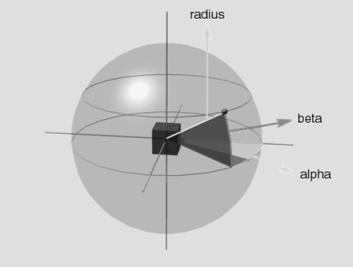

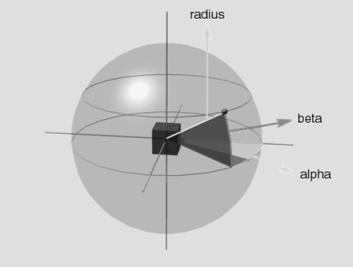

5.3 Hand Position layer specifies the approach direction of the hand towards

the object. The representation is allocentric (centred on the object).

Geometrically the space around the object can be uniquely specified with the

vector (azimuth, elevation, radius). The Hand Position layer generates the

vector by a local population vector computation. The locus of the local

neighbourhood is determined by the probability distribution represented in the

firing potential of Hand Position layer neurons (see Chapter 4, for details) 77

Figure

5.4:The grasp stability we used in the simulations is illustrated for a hypothetical

precision pinch grip (note that this is a simplified, the actual hand used in

the simulations has five fingers) 79

Figure

5.5 The trained model’s Hand Position layer is shown as a 3D plot. One

dimension is summed to reduce the 4D map to a 3D map. Intuitively the map says:

‘when the object is above the shoulder and in front grasp it from the bottom’ 81

Figure

5.6: The output of the trained model’s target position layer is shown as a 3D

plot. One dimension is summed to reduce the 4D map to a 3D map. The object is

on the left side of the (right handed) arm. Intuitively, the map says ‘when the

object is on the left side grasp it from the right side of the object’ 81

Figure

5.7 The learning evolution of the distribution of the Hand Position layer is

shown as a 3D plot. Note that the 1000 neurons shown represent the probability

distribution of approach directions. Initially, the layer is not trained and

responds in a random fashion to the given input. As the learning progresses,

the neurons gain specificity for this object location. 82

Figure

5.8 ILGM planned and performed a power grasp after learning. Note the

supination (and to a lesser extent extension) of the wrist required to grasp

the object from the bottom side. 83

Figure

5.9 Two learned precision grips (left: three fingered; right four fingered) are

shown. Note that the wrist configuration for each case. ILGM learned to combine

the wrist location with the correct wrist rotations to secure the object. 83

Figure

5.10 ILGM was able to generate two fingered precision grips. However these were

less than the three or four finger grips. 84

Figure

5.11 The cube on the table simulation set up. ILGM interacts with the

object with the physical constraint that it has to avoid collision with the

table. 85

Figure

5.12 ILGM learned a ‘menu’ of precision grips with the common property that the

wrist was placed well above the object. The orientation of the hand and the

contact points on the object showed some variability. Two example precision

grips are shown in the figure. 85

Figure

5.13. ILGM acquired thumb opposing index finger precision grips. 86

Figure

5.14 The three cylinder orientations and grasp attempts by the poor vision

condition. 87

Figure

5.15 The orientation match of the hand and the cylinder is illustrated. Dashed

line with diamonds: 5 months old infants; Solid line with diamonds: 9 months

old infants; Dashed line with circles: ILGM with no affordance; Solid line with

circles: ILGM with affordance (infant data from Lockman et al. (1984)). Right

panel illustrates the object orientation used for the simulation and for the

infants in this comparison.. 88

Figure

5.16 The hand orientation and cylinder orientation difference curves for

individual trials. The columns from left to right correspond to horizontal,

diagonal and vertical orientations. The upper row flat class of error

curves, lower row non-flat class for error curves (see text for

explanation) 89

Figure

5.17 The hand orientation and cylinder orientation difference curves while ILGM

was executing four types of grasp in the full-vision condition. Left two

figures are two typical error curves for the horizontal cylinder. Note that the

two horizontal case error patterns reflect the two possible grasps: from the

bottom and from the top. The third and fourth are typical error curves for the

diagonal and vertical cylinders respectively.. 90

Figure

5.18 The grasps performed after ILGM learned the association between the wrist

rotations and the object affordance (orientation) 91

Figure

6.1: The overall MNS model. The grey background rectangle shows the focus of

this chapter. In addition to the areas shown, area F2 will be posited as being

involved in grasp planning. 94

Figure

6.2 Left: precision grasp (pad opposition); Middle: Power grasp (palm

opposition); Right: Side grasp (side opposition). Each of the 3 grasp types

here is defined by specifying two ‘virtual fingers’, VF1 and VF2, which are

groups of fingers or a part of the hand such as the palm which are brought to

bear on either side of an object to grasp it. The specification of the virtual

fingers includes specification of the region on each virtual finger to be

brought in contact with the object. A successful grasp involves the alignment

of two "opposition axes": the opposition

axis in the hand joining the virtual finger regions to be opposed to each

other, and the opposition axis in the

object joining the regions where the virtual fingers contact the object

(adapted from Iberall and Arbib 1990) 95

Figure

6.3 The two possible organization of learning to grasp circuit are shown.

According to Hypothesis I, two grasping circuits exist; the phylogenetically

older one located in area F1 (hatched background) and the newer one in the

premotor cortex (solid background). According to Hypothesis II, F1 is involved

in only executing the premotor cortex instructed movements. LGM is based on the

latter hypothesis. The details of LGM are shown in Figure 6.4. Note that we

introduced area F2 for complementing the MNS structures. The visual input to

area F2 originates from MIP (not shown) and V6a. 98

Figure

6.4 The Learning to Grasp Model. F5 is implicated in all grasp related

parameters. Dashed connections indicate the direct corticospinal projections of

premotor areas. Area F5 works with area F2 and F4 to transform visual

affordances signalled by parietal areas into a grasp plan. The grasp plan is

then, relayed to primary motor cortex (F1) and spinal cord for execution. The

tactile feedback of the action is integrated in the first somatosensory cortex

(SI), which mediates the adaptation of the parietal-premotor and inter-premotor

connections. 100

Figure

6.5 The top-left shows the Hand Position layer output summed over the radius

(approach direction is encoded in spherical coordinates) as a 3D plot. The top-centre panel shows the sample

generated from the Hand Position distribution. Bottom-left shows the Wrist

Rotation layer output summed over the heading axis as a 3D plot. The

bottom-centre panel shows the parameters picked from the Wrist Rotation layer

distribution. Note that Wrist Rotation layer distribution depends on (i.e.

represents a conditional distribution) the sample picked from the Hand Position

layer. The rightmost panel shows the executed grasp.. 104

Figure

6.6 Using the same LGM used for Figure 6.5, another grasp plan is generated

(left four panels). The resulting grasp is shown on the right. By comparing the

grasp plan shown on the left four panels with of Figure 6.5’s grasp plan we see

how the selection of a different approach direction (see the centre-top panels

of both figures) changed the Wrist Orientation distribution.. 105

Figure

6.7:Two very different grasp generation from the same LGM. Upper panel:

Grasping with maximum wrist extension with some pronation. Lower panel:

Grasping with maximum wrist supination and small wrist extension. Note that the

Wrist Layer probability map is the same since the approach direction was chosen

the same (the small dots in the right most panels). 105

Figure

6.8 The grasps performed after LGM learned the association of hand rotations

with the object orientation input (full vision condition). Note that the left

panel shows a bottom side grasp. All of the shown grasp configurations

satisfied grasp stability criterion.. 107

Figure

6.9 In the poor-vision case, the hand rotation neurons in LGM show the same

response for horizontal (left panel), diagonal (centre panel) and vertical

(right panel) object presentations because of the lack of axis orientation

input. 107

Figure

6.10 When LGM has access to axis orientation information the Hand Rotation

neurons represent different plans in response to horizontal (left panel),

diagonal (centre panel) and vertical (right panel) object presentations. 108

Figure

6.11 The small object presentation produced two peaks of activity in the Hand

Position layer corresponding to the probability distribution of approach

directions. The right panel shows the executed grasp when the data generation

was localized in the area pointed by the leftmost arrow. 109

Figure

6.12: A large cube was grasped by securing the object between the thumb and the

other fingers (right panel). The Hand Position layer activity is shown on the

left panel. The neuron with largest activity is marked with an arrow... 110

Figure

6.13 The largest object presentation and grasping. The Hand Position reflects a

single reach direction as indicated with an arrow... 110

Figure

6.14:The Hand Position layer activity is superimposed to demonstrate that the

maximum activity loci are separated for each object indicating selectivity

for object size. 111

Figure

6.15 The trained Learning to Grasp Model executed grasps to objects located at

nine different locations in the workspace. The grasp locations were not used in

the training. All of the grasps shown were

stable. 114

Figure

6.16 The same model used in generating Figure

6.15 was used to generate a different set

of grasps. Again all the grasps were stable. 114

Figure

6.17 The internal mechanisms of representing and generating multiple grasp

plans are shown. Solid arrows (except object encoding) denote learned

connections while empty arrows indicate data generation. The flow of operation

starts with the presentation of the object (the bottom centre) and follows the

arrows. At the top-centre, the data generation can yield multiple approach

directions. The two possible approach directions are shown creating two streams

(left column and right column), each of which yields different grasp execution

(bottom pictures of left and right column). 115

Figure

7.1 The MNS model repartitioned to show the focus of this chapter. The grey

background marks area of interest. 118

Figure

7.2 One alternative visual control structure for manipulation is shown within

the MNS framework. The mirror neurons generate feed-forward commands. 119

Figure

7.3 Another alternative visual control structure for manipulation is shown

within the MNS framework (compare with Figure 7.2). The mirror neurons generate

feedback commands. 120

Figure

7.4 The feedback and feed-forward control view of the F5 grasping circuit,

alternative I: F5mirror neurons learn to generate feed-forward command. The

desired state is assumed to be available and is converted to a correction motor

command by F5motor-only units using stochastic gradient descent. F5canonical

neurons gate the feed-forward and feedback pairs. 121

Figure

7.5. The feedback and feed-forward control view of the F5 grasp circuit,

alternative II: F5mirror neurons learn to compute the error. The error is then

converted to a correction motor command by F5motor-only units. F5canonical

generates the feed-forward command signal. 122

Figure

7.6 The schema level view of the feedback controller. The visual processing

encapsulates the process of extracting an error based on the vision of the hand

and the object. Lower Motor Centers encapsulates the functionality involved in

transforming the motor signal into actual commands sent to muscles. 125

Figure

7.7 The leaky integrator implementation of the feedback circuit that solves the

inverse kinematics problem for precision grasping. See text for the explanation.. 126

Figure

7.8 Three grasping tasks executed by the feedback circuit proposed shown on the

upper half of the figure. The change of arm/hand configuration during the

execution is illustrated by snapshots of the arm/hand. Each hand figure is

accompanied (lower half) by the error plot. The grasp execution is stopped

(success) when the sum of finger distances to their target was less than 2mms. 128

Figure

7.9.The Visual feedback circuit generating desired trajectories for the ‘lower

level motor centre’ (implemented as a PD controller) 129

Figure

7.10 The slow (2 seconds) (lower half) and fast (0.5 seconds) (upper half)

performance of the ‘visual feedback servo’ + ‘PD controller’ system is shown.

The right hand side graphs show the tracking error (of the wrist) versus time.

In the slow case, the object can be grasped but in the fast case, it is missed.. 130

Figure

7.11 The F5 mirror neurons viewed as the memory based feed-forward controller.

The arrows below the sheet of neurons indicate outputs while the arrows coming

above the sheet indicate inputs. 133

Figure

7.12 The arm configurations that were acquired during 6 object grasping actions

are shown.

Each of the superimposed configurations is represented by a unit in the

feed-forward layer. 134

Figure

7.13 The trajectory generation with feedback and feed-forward control is

illustrated for comparison with Figure 7.8 (feedback-only system). In the lower

panel the error graphs are plotted as error versus iteration. The error is the

sum of squared distances of the fingertips to their targets. The rightmost

object was not grasped in the training (a novel object/location). Thus the

system could not make use of the feed-forward signal, approximately after

iteration 25 and switched to feedback only mode, resulting in slower

positioning of the fingers on the target locations. 135

Figure

7.14 The feedback, feed-forward and lower level motor servo

and the dynamic arm was simulated all together. Upper half: The grasp lasted

0.5 seconds. Lower half: the grasp lasted 2 seconds. The fast movement error

reduced with a factor of 6 while the slow movement reduced with a factor of 10

in terms (compare with Figure

7.10) 136

Figure

7.15 The top row demonstrates two trajectory-planning examples for grasping

without obstacle. The bottom row demonstrates how new trajectories are formed

by introduction of an obstacle as a local inhibition on the feed-forward

controller units. 137

Figure

7.16 The feed-forward unit activations for four grasp observations shown as

unit versus time. Each graph consists of 157 neurons acquired during the

learning phase (the rows). The columns represent the time. 138

Figure

7.17 A mirror neuron recorded during a grasp observation. On the left the

raster plots; on the right the histogram. The recording data shown spans 2

seconds. In addition, the hand start to move approximately at time = 1 second

indicated by the vertical bar at the centre of the raster panel (Rizzolatti and

Gallese 2001) 139

Figure

7.18 Top row: Real mirror neuron recording during a precision grasp. Bottom

row: One of the feed-forward controller unit’s responses to vision of a grasping

action. In the left panels, each raster row corresponds to a trial (Poisson

spike generation for the model). The right panels show the histograms. The

rasters aligned according to the contact of the hand with the object. 140

Figure

7.19. The similarity of a real neuron and model unit is demonstrated. Left two

panels real mirror neuron rasters and histogram. Right two panels are the model

generated rasters and histogram. A slow increasing activity is observed in both

cases. 140

Figure

7.20 Left: a sharp mirror neuron activity, which could only be replicated with

our simulator by reducing the receptive field. Right: Similar response profile

obtained from one of the feed-forward module units. 141

Figure

7.21 The population activity of feed-forward units with smaller receptive

fields. The feed-forward unit we used to match the real mirror firing profile

in Figure 7.20 is marked with an ellipse. 141

Imagine

two friends chatting over a coffee table. At the instant when one of them wants

to get a sip of coffee, he effortlessly reaches and grasps his cup, shaping his

hand according to the properties of the cup. If it were a mug, he would reach

and grab the handle so as to counteract gravity; if it were a paper cup,

probably he would grasp the cup with his whole hand covering the surface of the

cup unless the coffee is too hot, forcing him to grab the cup from its outer

rim. When he starts reaching for the cup, his friend easily understands that he

wants to drink coffee even before his hand contacts the mug. Probably the way

he reaches and shapes his hand conveys the information that he is not aiming at

other objects on the table. He could possibly even infer that his coffee is hot

before he grabs it. The situation for the two is reciprocal; they switch roles

of being observer and actor as they sip their coffee.

When

considered individually each of them is engaged in two tasks -grasping

and observing. The former is a goal directed movement while the

latter is a perceptual task. The grasping task requires the integration

of information from a variety of sources (MacKenzie and Iberall 1994). The context and visual analysis of an object determines the

general grasp plan. Proprioceptive (during reach), tactile and kinesthetic

information (after contact) are used to guide the hand and arm to complete the

grasp. Thus, the task of grasping involves, at the least, the determination of

the following information:

1. How

to conform the hand to the object. Based on object properties such as the

shape, orientation and size the questions of ‘which side of the object the hand

should approach’; ‘which fingers should be engaged’, and ’what should the

appropriate wrist rotation be’ must be answered.

2. How

to control the limbs. According to the physical properties of the environment

and the biomechanics of the limbs, a control mechanism must engage muscles to

transport the hand and shape it to match the specifications given by (1).

The

former is the problem of grasp planning; the latter is the problem of grasp

execution, which involves dynamics, that is, the adjustment of forces that

the muscles must exert to achieve the specified plan. Many other information

sources are integrated to refine the reach and grasp plan. For example,

obstacle avoidance, speed, and accuracy requirements affect the reach component

while the force requirements to secure a heavy slippery object or to handle a

delicate object affect the grasp component.

The

perceptual task, in essence, does not involve determining movement related

parameters, as no movement has to be made. Nevertheless, the observer

recognizes the action even before it is complete. Thus, the observer has to

analyze the motion of the hand and its relevance to the target to determine

whether the hand approach and preshape would yield a grasping behavior. It is

interesting to note that there is some similarity in action recognition and

action generation in terms of the underlying computations.

In

fact, if one could compare the activity of observer’s brain while he is

engaged in grasping versus while he is observing his peer grasping, one would

see that the motor related regions of the observer’s brain was active in

both observation and execution. Thus, one could conclude that the observer’s

brain mirrored the action of his peer by establishing equivalence

between the observed action and his grasp plan.

The

mechanism I caricaturized above is the focus of this thesis. The

execution-observation matching system as introduced above does exist in

monkey. There is strong evidence that human brain is also endowed with similar

mechanism.

To

be precise, this thesis investigates the cortical mechanisms involved in:

1. Translation

of a visual description of an object into an appropriate grasp plan that is,

learning to make motor plans that yield grasps that are appropriate for the

target objects

2. Mapping

of observed grasp actions into internal motor representations

3. Developmental

processes shaping neural circuits to provide the functionality (1)

4. Developmental

processes of (2), that is learning to recognize observed grasp actions

based on self-executed grasps

The

thesis analyzes (human and monkey) behavior and monkey neurophysiology from a

developmental perspective, and constantly asks the questions: what is the

underlying factor that give rise to such mechanisms? How does it shape the

basic schemas of newborns into a functional form? The aim of the thesis is to

give answers to these questions via computational models that learn and adapt starting

from minimal bootstrapping behaviors. The models and the hypotheses developed

in the thesis are based on:

1. Human

infant motor development studies

2. Human

behavioral and neuroimaging studies

3. Monkey

neurophysiology and neuroanatomy studies

The

thesis also presents significant predictions that can be experimentally tested

with the hope that experimentalists will be stimulated to conduct the

experiments suggested or design new experiments to test the model predictions

and further uncover details of the cortical mechanisms of action recognition

(mirror neurons), visuomotor learning and motor planning. The results of these

experiments then would feedback into the modeling presented here, leading to

validation (or rejection) and refinements of the models developed.

The

organization of the thesis is as follows:

Chapter

II presents the basic biological background with an emphasis on the brain

areas that will be the focus of modeling. ‘Mirror Neurons’ (Dipellegrino et al. 1992; Gallese et al.

1996; Rizzolatti et al. 1996a) of the monkey premotor cortex are introduced in this chapter.

The research on locating mirror neurons in human is also reviewed in Chapter

II.

Chapter

III develops the Mirror Neuron System (MNS) model based on the

hypothesis that self-observation of grasping movements mediates the adaptation

of parietal-premotor and premotor-premotor connections. Using simulation

results, the chapter presents predictions on the timing of mirror neuron

responses (and others) and suggests neurophysiological experiments for testing

the predictions. In addition, Chapter III introduces the grand schemas of Visual

Analysis and Reach and Grasp. The simulated 3D arm/hand of the Reach

and Grasp schema is used in other chapters to graphically display the

learned grasp actions.

Chapter

IV develops a reinforcement learning based neural architecture that is

capable of representing multiple values of a variable in terms of its

probability distribution. The probability distributions are shaped through

interaction with the environment so as to reflect the reward distribution in

the environment. Chapter IV shows how layers can be connected to build

multilayer reinforcement networks that are capable of representing conditional

probability distributions. The architecture present in Chapter IV is used by

Chapter V and Chapter VI.

Chapter

V develops the Infant Learning to Grasp Model (ILGM) based on infant

literature. ILGM is a schema level behavioral model that reproduces many infant

behaviors and produces testable hypotheses through simulation results. The

notable property is that ILGM starts with a very basic repertoire of action

mimicking neonates and show how a range of grasping categories can emerge via

explorative interaction with the environment.

Chapter

VI introduces the neurophysiological Learning to Grasp Model (LGM) as a

neural level instantiation of ILGM constrained by monkey neurophysiology and

neuroanatomy. LGM replicates existing premotor cortex findings such as the

object selectivity of F5 canonical neurons and yields testable predictions

about the grasp learning circuit in monkeys. This chapter also, poses serious

questions to neurophysiologists on the assessment used to relate neuron firing

to behavior. In particular, Chapter VI argues, by simulation results and

examples from literature, that behavior-neural activity correlation is

not an appropriate measure for investigating brain mechanisms of movement planning.

The chapter suggests an experimental methodology for deciphering the neural

substrates of movement planning suitable for finding the neuron populations

that encode the true movement control variables (parameters).

Chapter

VII asks the question, ‘why do the mirror neurons exist?’ The hypothesis of

the chapter (introduced in Chapter III) is that mirror neurons evolved

initially to provide visual feedback for manual manipulative actions, and later

became effective in recognizing actions of others. Chapter VII presents a

simplified model of grasping (planar arm/hand) with increasing degrees of

complexity in its control. First, a biologically realistic stochastic gradient

following visual feedback circuit that can perform precision grips is

developed. Then a memory based neural feed-forward circuit is introduced to

augment the visual feedback servo circuit. After assessing the behavioral

properties of the integrated visual servo circuit, the chapter analyzes the

activities of individual memory units in the feed-forward circuit. The

activities are converted into spike patterns using Poisson model of neural

firing for a direct comparison with mirror neuron data. The results indicate

that some of the feed-forward units’ firing patterns are very similar to mirror

neurons’, in spite the fact that the feed-forward activity is characterized by

a grasp error map. This supports the hypothesis that mirror neuron system

initially evolved to serve as a visual servo circuit for manual manipulation.

Chapter

VIII summarizes the key results and presents the predictions with high

impact potential.

Chapter

IX concludes the thesis by pointing out some open questions and

recommending future research directions.

In this chapter, we review the literature on the brain areas

involved in the mirror neuron system functioning and the grasp related

visuomotor transformation. We present major findings from neurophysiological

and neuroanatomical (connectivity) literature to form a background for modeling

chapters. The main regions of interest are intraparietal sulcus and premotor

areas. A supplementary review of the superior temporal sulcus and other

parietal areas is included. The posterior parietal cortex is involved in

sensory-motor transformations, combining various sensory inputs and computing

representations that are used by the motor system to generate movements. In

turn, premotor cortex is involved in generating motor plans based on parietal

representations of object affordances. The superior temporal sulcus performs

visual analysis of motion and form including biological motion providing visual

input to the parietal areas.

The macaque inferior premotor cortex is located ventral from

the spur of the arcuate sulcus (see Figure

2.1) and considered to be involved in reaching and

grasping movements (Rizzolatti et al. 1988). This region has been further

partitioned into two sub-regions: F5, the rostral region, located along the

arcuate and F4, the caudal part (see Figure

2.1).

Figure 2.1 Lateral view of macaque

brain showing the areas of agranular frontal cortex and posterior parietal

cortex (adapted from Geyer et al. 2000). The

naming conventions: frontal regions, Matelli et al.(1991);

parietal regions, Pandya and Seltzer (1982)

The neurons in F4 appear to be primarily involved in the

control of proximal movements (Gentilucci et al. 1988), whereas the neurons of F5

are involved in distal control (Rizzolatti et al. 1988).

Area F5 is one of the various agranular frontal areas of

particular interest due to its complex function (Matelli 1986). In the monkey, this area

lies immediately caudal to the inferior arm of the arcuate sulcus. Stimulation

and recording experiments showed that F5 is concerned with both hand and mouth

movements. Hand movements are represented mostly in its dorsal part while mouth

movements tend to be ventrally (Rizzolatti et al. 1988). Little is known about the

functional properties of mouth neurons, however hand neurons were extensively

studied.

2.2.1.1

Motor properties

Hand neurons discharge during specific goal-related

movements such as grasping, tearing, manipulating and holding (Rizzolatti et al. 1988). Many of them are specific

for a particular type of hand movement (Rizzolatti et al. 1988). In addition, some F5 neurons

become active at the presentation of three-dimensional objects, in the absence

of any overt movement, similar to AIP neurons that become active when the

monkey fixates on a presented object. In many cases these visually triggered

discharge requires a congruence of the presented object to the grip coded by

the neuron (Murata et al. 1997a).

Rizzolatti et al. (1988) found that most F5 neuron

firings correlated with specific goal related distal motor acts rather than

with single movements made by the animal.

Using the motor acts as the classification criterion, they subdivided the

neurons into different classes such as grasping-with-the-hand-and-the-mouth,

grasping-with-the-hand and holding neurons. The discharge of many

F5 neurons depended on the way in which the hand was shaped during the motor

act. For example the three main type of neurons found by Rizzolatti et al. (1988) were precision grip, finger

prehension and whole hand prehension neurons. Furthermore, almost

all of the neurons would discharge when the action was performed with either

hand. In addition, Rizzolatti et al. (1988) reported that 20% of the

recorded neurons had visual response properties and they required

motivationally meaningful visual stimuli to be triggered. Furthermore, they

observed that, in the case of distal neurons, there was a relationship between

the type of prehension coded by the cells and the size of the stimulus

(presented object) effective in triggering the neurons. However, note that the

purely motor related neurons constitute the majority of F5 neurons (Gallese 2002).

2.2.1.2

Visual properties: canonical neurons

Murata et al. (1997a) studied the properties of

object related activity of F5 neurons. The result of their study indicates that

some F5 neurons encode object shapes in motor terms. That is, every time an

object is presented, its visual features are automatically translated into an

internal motor representation. The translation takes place whether a motor

response is required or not. Therefore, these neurons are not intention

related.

Figure 2.2 A

canonical neuron response during grasping of various objects in the dark

(left to right and top to bottom: plate, ring, cube, cylinder, cone and sphere.

The rasters and histograms are aligned with object presentation. Small grey

bars in each raster marks onset of key press, go signal, key release, onset of

object pulling, release signal, and object release, respectively. The peaks in

ring and sphere object cases correspond to the grasping of the object by the

monkey (adapted from Murata et al.

1997a)

The similarity of the AIP and F5 visual neuron responses

suggests that they may be part of a visuomotor transformation circuit. This

view is supported by the reciprocal connections between F5 and AIP (Sakata et al. 1997a). Figure 2.2 shows a canonical neuron’s response during motor

execution. To test whether the motor related activity was due to the vision of

the object, the trial was performed in the dark. The neuron was primarily

responsive for ring grasping and a lesser extend the sphere grasping. The same

neuron’s response in the object fixation, without any subsequent grasp

requirement, is shown in Figure

2.3. It is important to note that the motor preference of

the neuron is reflected in the visual fixation condition as well.

Figure 2.3 The

motor responses of the same neuron shown in Figure

2.2. The motor preference of the neuron is also carried

over to the visual preference (compare the ring and sphere histograms of both

figures) (adapted from Murata et al.

1997a)

Recording studies of the rostral part of inferior area 6

(area F5) region showed that some of the visual neurons were responsive to

action observation (Gallese et al. 1996;

Rizzolatti et al. 1996a; Dipellegrino et al. 1992). The cells with action

observation property have been located on the convexity of the bank of arcuate

sulcus.. Like other F5 neurons, mirror neurons were active when the monkey

performs a particular class of actions. However, in addition the mirror neurons

became active when the monkey observes the experimenter or another monkey

performing an action (Gallese et al. 1996;

Rizzolatti et al. 1996a; Dipellegrino et al. 1992). In most of the mirror

neurons, there was a clear relation between the coded observed and executed

action. The actions studied so far include grasping, manipulating and placing.

The congruence between the observed and executed action varied. For some of the

mirror neurons, the congruence was quite loose; for others, the general action

(e.g. grasping) and the way the action was executed (e.g. power grasp) had to

match in order to activate to neuron (Gallese et al. 1996;

Rizzolatti et al. 1996a).

An important observation was that mirror neurons required an interaction

between the experimenter and the object; the sight of the experimenter or the

object alone was not enough to trigger mirror activity. (Gallese et al. 1996;

Rizzolatti et al. 1996a)

All the neurons were studied by examining their discharge while the

experimenter performed a series of motor actions in front of the monkey. These

actions were related to food grasping and manipulation and other objects

grasping and manipulation. In order to verify whether the recorded neuron coded

specifically hand-object interactions a series of actions such as mimicking

grasping without any object, prehension actions with tools, mimicking grasp

with spatially separated object were performed. All experimenter’s actions were

repeated on different positions (e.g. left,-right, far-close). Of the 532

recorded neurons, 92 of them showed mirror property (i.e. they discharged both

when the monkey made active movements and when it observed specific meaningful

actions performed by the experimenter) (Gallese et al. 1996).

The two important aspects of the mirror neurons are (1) they

are robust, they don’t habituate and (2) the distance of the experimenter to

the monkey does not affect the response intensity of the cell. Most of the

neurons are active during observation of a single action: for example in the

study of Gallese et al. (1996). 51/92 of the cells preferred

only single action; 38/92 of the cells preferred two or three actions; 3/92 of

the cells were active for both hand or mouth grasps. The motor properties of

these neurons were indistinguishable from those of other F5 neurons. They had

preference for certain actions: 60/92 cells responded when the animal performed

only a grasping action. 9/92 cells fired when the animal grasped with his

mouth. 11/92 of cells fired for both hand and mouth grasps (Gallese et al. 1996). The remaining 14 neurons had

the distribution: tearing (2), bringing to the mouth (2), manipulating (8). The

light and dark conditions were employed for 14 cells to test whether the motor

property was a result of self-hand vision. All the tested neurons confirmed

that, the discharge was not due to self-vision (Gallese et al. 1996).

Figure 2.4 Activity of a cell during

action observation (left) and action execution (right). There is no activity in

presentation of the object during both initial presentation and bringing the

tray towards the monkey. The vertical line over the histogram indicates the

hand-object contact onset. (from Gallese et al., 1996).

Figure

2.4

shows the dual response property of mirror neurons. The recorded neuron in the

figure was silent during the presentation of the object, but started firing

when the experimenter picked up the object. The neuron, interestingly, did not

fire during the time the tray was moved towards the monkey and finally it

started firing again when the monkey picked up the object.

Note that during the period when the tray was moved towards to monkey it

could predict that he would grasp the object (Gallese et al. 1996)

Figure

2.5 shows the specificity of a grasp related mirror

neuron where the experimenter performed (A) a precision grip, (B) a whole hand

prehension, and (C) mimicked a precision grip. The notable property of this

neuron is that miming the action was not effective in activating the neuron.

Figure 2.5 Visual response of a mirror

neuron. A. Precision grasp B. power grasp C. mimicking of precision grasp. The

vertical lines over the histograms indicate the hand-object contact onset.

(adapted from Gallese et al., 1996)

In most mirror neurons, there is a relationship between the

visual action they respond and the motor action they code. The mirror neurons

studied by Gallese et al. (1996) were divided into three,

according to their visuomotor congruence: strictly congruent, broadly

congruent and non-congruent. A neuron was labeled as strictly

congruent when the effective observed and executed actions match both in terms

of general action type (e.g. grasp) and in terms of how the action was executed

(e.g. power grasp). Figure 2.6 shows a strictly congruent neuron.

Figure 2.6 Example

of a strictly congruent manipulating mirror neuron: A) The experimenter

retrieved the food from a well in a tray. B) Same action, but performed by the

monkey. C) The monkey grasped a small piece of food using a precision grip. The

vertical lines over the histograms indicate the hand-object contact onset

(adapted from Gallese et al., 1996).

The number of strictly congruent neurons found was 29/92.

The number of broadly congruent neurons was 56/92 (Gallese et al. 1996). In the case of broadly

congruent neurons, there was a link between the executed action and the

observed preferred action. These neurons were further sub-classified according

to their motor strictness: If a broadly congruent neuron fired only for one

motor act (e.g. grasp) with only a single hand configuration (e.g. precision)

then it would be of the first type. On the other hand, if the neuron fired for

one motor act but the way the action was performed did not affect the firing

then it would be of the second type. The third and last type of broadly

congruent neurons appear to be activated by the goal of the observed action (Gallese et al. 1996). Finally, the neurons with no

apparent congruence were labeled as non-congruent (7/92). Figure 2.7 shows the classification of F5 neurons including the

mirror neuron types discussed.

Figure 2.7 The

classification of area F5 neurons derived from published literature (Dipellegrino et al. 1992;

Gallese 2002; Gallese et al. 1996; Murata et al. 1997a; Murata et al. 1997b;

Rizzolatti et al. 1996a; Rizzolatti and Gallese 2001). All F5 neurons fire in

response to some motor action. In addition, canonical neurons fire for object

presentation while the mirror neurons fire for action observation. The majority

of hand related F5 neurons are purely motor (Gallese 2002)(labelled as Motor Neurons

in the figure)

Fogassi et al. (1998) found that area F5 was not

the only area that had mirror neurons. The rostral part of the inferior

parietal lobule of the macaque monkey (area 7b or PF) also has neurons with

similar mirror properties. Although some neurons with strict congruence of the

executed and observed action have been found, the majority of the neurons

studied had limited congruence (similarity) or no congruence at all (Fogassi et al. 1998). 8/43 PF mirror neurons were

strictly congruent; 9/43 had low level of congruence (a similarity); and the

majority (26/43) were non-congruent (Fogassi et al. 1998). The main cortical input to

area F5 comes from the inferior parietal lobe, and in particular areas AIP and

PF (Matelli 1986). The similar properties of F5

canonical neurons with AIP neurons, and F5 mirror neurons with PF neurons,

suggests that these three areas work together for visuomotor transformation and

action recognition.

Area F4 (see Figure

2.1) is connected with area F3 and to a lesser extent, to

area F6 (Geyer et al. 2000). Area F4 projects to primary

motor cortex (F1). The main parietal input to area F4 comes from VIP (Geyer et al. 2000). In area F4 the space is

coded in body-parts-centred coordinate frame (e.g. centred on the hand) (Fogassi et al. 1996). When the body-part-moves the

coordinate system follows, but when the gaze moves the coordinate frame stays

anchored on the body-part (Fogassi et al. 1996). Many of F4 neurons fire

during reaching movements of the proximal arm but not the movements of the

distal arm. The neurons usually have somatosensory receptive fields that match

the movement direction of the limb (Gentilucci et al. 1988). It is suggested that VIP-F4

circuit transforms object locations into motor plans to reach towards them as

area F4 sends descending projections to the brain stem and spinal cord (Rizzolatti et al. 1998).

Area F2 (see Figure

2.1) neurons can be grouped into three different classes:

(1) signal related neurons, (2) set-related neurons, and (3) movement-related

neurons. (see Geyer et al. 2000

for a review).

Signal related neurons are activated right after visual instruction stimuli and

have phasic response. Set-related neurons show sustained activity after the

instruction stimulus during the delay period. Movement related neurons start

firing after the trigger signal. Area F2 receives somatosensory input from

areas PEip and PEc, and visual input from areas MIP and V6A. Rizzolatti et al. (1998) suggested that F2 can use the

MIP and V6A inputs in controlling arm position during the transport of the hand

to spatial targets.

Figure 2.8 The macaque parieto-frontal

projections from mesial parietal cortex, medial bank of the intraparietal

sulcus and the surface of the superior parietal lobule (adapted from Rizzolatti et

al. 1998). Note that the Brodmann’s area 7m corresponds

to Pandya and Seltzer's (1982) area PGm

Area F7 receives inputs from area 7m (Ferraina et al. 1997a;

Ferraina et al. 1997b)

(see

Figure 2.8).

The neurons in area F7 fire in response to arm movements (Caminiti et al. 1991;

Crammond and Kalaska 1996)

or visual stimuli (Shen and Alexander

1997b).

However, in contrast to area F2, area F7 visual response does not depend on a

pending movement (di Pellegrino and Wise

1991).

It appears that the 7m-F7 circuit is important for conditional movement

selection (Geyer et al. 2000). The other projection to area

F7 is from LIP (Lewis and Van Essen

2000),

where saccade related target memory activity is represented. The neuronal

activity in LIP area can be modulated by attention and eye position (see Colby and Duhamel

1996 for a review of LIP neuron responses). Thus, LIP-F7 circuit may be

important for complex saccade control (Geyer et al. 2000).

Area F1 (see Figure

2.1) is organized somatotopically, where the body parts

that require finer movements are represented over a larger cortical surface

than the body parts that require less precision. Each neuron may contribute to

multiple spinal neuron pools. The motor parameters that are encoded by F1

neurons are usually a combination of the following physical parameters: force,

rate of change of force, joint position or the velocity of the movement (Pandya and Seltzer

1982).

However, it is possible to get meaningful physical parameters using a population

of F1 neurons. Georgopoulos et al. (Georgopoulos et al.

1982)

trained monkeys to perform radial outward reaches to a target light. Recording

over a population of primary cortex neurons they showed that each neuron fired

maximally for a direction (preferred direction), and fired less and less as the

direction deviated form the preferred direction. Given a population, the

weighted sum of the preferred direction vectors, the population vector, predicted

the monkeys reaching direction.

The subcortical input to F1 is relayed by thalamus (see Matelli et al. 1989

for the distinct nuclei projecting to F1). The corticocortical inputs

to hand area of F1 comes primarily from supplementary motor area (SMA) and to a

lesser extent from the lateral premotor cortex. The other inputs are from area

1, 2 and 5 (Ghosh et al. 1987). Approximately half of the

coriticospinal projections are formed by area F1 neurons (Dum and Strick 1991).

Area F3 is somatotopically organized where arm and leg

representations run as two oblique dorsorostral-to-ventrocaudal directions (see

Figure 2.1). In addition, area F3 has an orofacial

representation, while area F6 has only an arm representation (Luppino et al. 1991).

Areas F3 and F6 have different patterns of thalamic input

indicating that they are part of different motor loops with different functions

(Luppino et al. 1991). Cortical input to area F3

originate mainly from areas F2, F4, F5, F6 and F7, and the primary and

secondary somatosensory cortices and the posterior parietal areas PE and Peci,

and the cingulate and the primary motor cortex. On the other hand, area F6 is

mainly connected with areas F5 and F7, followed by the prefrontal and cingulate

cortex, F2, F3 and F4, and to lesser extend with the posterior areas PG, PFG

and superior temporal sulcus (Geyer et al. 2000).

In the macaques’s brain, posterior parietal cortex and the

cortex of caudal superior temporal sulcus (STS) have been subdivided into

numerous areas mainly involved in spatial analysis of the visual environment

and in the control of spatially oriented behaviour (Maioli et al. 1998). The cortex of superior

temporal sulcus (STS) contains neurons that are selective for biological motion

observation such as limb movements and full body motion. Perrett et al. (1990b) reported STS neurons that

were responsive to goal directed hand motion (Perret et al. 1990b;

Perret et al. 1990a).

PET studies showed that STS in human shows strong activation during

biologically meaningful visual stimuli (Bonda et al. 1996) including goal-directed hand

actions. In monkeys, some of the STS neurons that are triggered by biologically

meaningful stimuli have two notable properties. Firstly, these neurons show

responses to goal directed hand motion in a translation/scale/rotation

invariant way (Perret et al. 1990b;

Perret et al. 1990a).

Secondly, these neurons do not require a pictorially realistic stimulus; they respond

to point light stimuli (Perret et al. 1990b;

Perret et al. 1990a)

where the stimulus is just the movement of a small number of points. Bonda et

al. (1996) also used this kind of

stimulus -

3 lights for the arm and 2 for each finger - when they scanned the

subjects during action observation.

Based on cytoarchitectonic and connectional criteria the

inferior parietal lobule (Brodmann’s area 7) includes areas 7a, 7b and 7ip (Cavada and

Goldman-Rakic 1989).

Area 7 reaches its highest development in primates (Cavada and

Goldman-Rakic 1989).

Damage to this area can cause impairments in spatial perception, neglect of

sensory stimuli contralateral to the damage side, defects in visually guided

reaching and occulomotor control (Ratcliff 1991; Stein

1991). Cavada & Goldman-Rakic (1989) divides

area 7 in sub-areas of 7m, 7a, 7b, and 7ip. Area 7m is located on the medial

surface of the hemisphere. This corresponds to Pandya and Seltzer's (1982) area PGm. Areas 7a, 7b lie on

the convexity of the posterior parietal lobule (Cavada and Goldman-Rakic

1989).

These regions correspond to Pandya and Seltzer's (1982) PG and PF respectively .

Pandya and Seltzer's (1982) also distinguish the

subdivisions of PGop and PFop in the lateral opercular part of PG and PF and

area Opt in caudal PG. Area 7ip is situated in the posterior bank of

intraparietal sulcus and referred as POa by Pandya and Seltzer (1982). In addition, the posterior

half of 7ip corresponds to functionally defined areas VIP (Maunsell & Van

Essen, 1983) and LIP (Andersen et al.,

1985). Figure

2.9 shows the intraparietal sulcus (opened) and neighbouring parietal regions

using Pandya and Seltzer (1982) nomenclature.

The anterior part of the lateral bank of the intraparietal

sulcus (area AIP) (see Figure

2.9) is involved in extracting visual properties of

objects relevant for grasping (Sakata et al. 1997a;

Sakata et al. 1998; Sakata et al. 1995; Murata et al. 1996).

Figure 2.9 The

intraparietal sulcus opened to show the anatomical location of AIP in the

macaque (adapted from Geyer et al.

2000)

Neurons in area AIP are active either in relation to the

grasping behavior alolne or in relation to the vision of objects (Sakata et al. 1998;

Sakata et al. 1997b; Taira et al. 1990). Some of the latter type are

active exclusively for visual fixation. In one study, 21% of cells studied

responded to simply fixating an object (visual-related), others (37%) were

active only when a movement is being made to manipulate the object